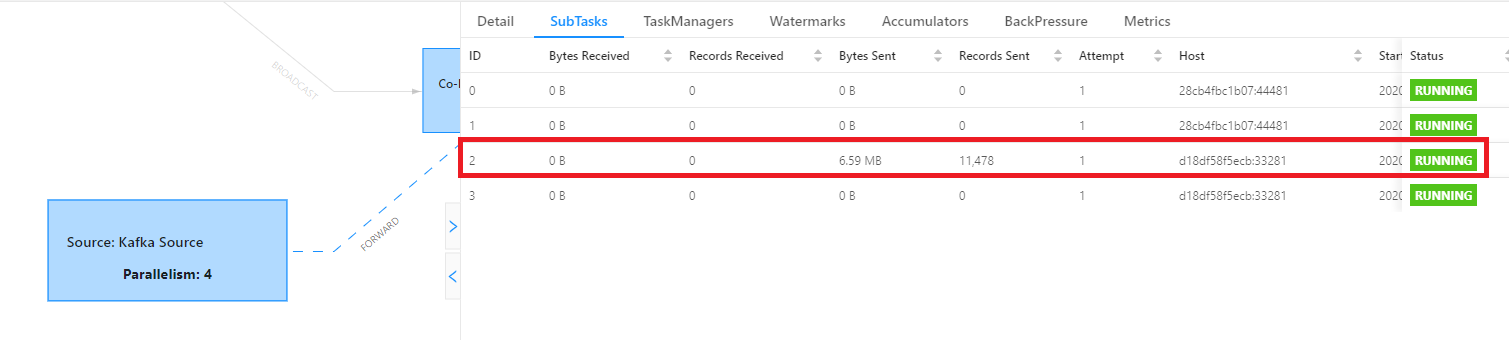

I found something interesting when running a Flink job that consumes a Kafka stream as its source. No matter how high the parallelism is set, there is always only 1 task manager consuming data from Kafka.

Findings

Flink creates as many consumers as the parallelism the user sets. When there are more Flink consumers than Kafka partitions, some Flink consumers sit idle!

The cause is on the Kafka side: by default, a topic is created with 1 partition. Adding partitions to the Kafka topic until they match the Flink parallelism solves this issue.
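As a rough sketch of that fix, the snippet below works out the partition count to aim for and then asks Kafka to grow the topic. It assumes the third-party `kafka-python` package and its `KafkaAdminClient.create_partitions` call. The helper names, the broker address and the topic name are made up for illustration and are not from the original setup.

```python
def target_partition_count(current_partitions, flink_parallelism):
    """Return the partition count the topic should have.

    Kafka can only increase a topic's partition count, so the result
    is never lower than the current value.
    """
    return max(current_partitions, flink_parallelism)


def grow_topic(bootstrap_servers, topic, total_count):
    """Ask the broker to raise `topic` to `total_count` partitions.

    Assumption: kafka-python is installed. It is imported lazily so the
    helper above can be used without it.
    """
    from kafka.admin import KafkaAdminClient, NewPartitions

    admin = KafkaAdminClient(bootstrap_servers=bootstrap_servers)
    try:
        admin.create_partitions({topic: NewPartitions(total_count=total_count)})
    finally:
        admin.close()


# Example call (needs a reachable broker; address and topic are placeholders):
# grow_topic("localhost:9092", "events", target_partition_count(1, 4))
```

Keep in mind that adding partitions changes which partition a given message key maps to, so this is safest on topics where per-key ordering across the change does not matter.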

There are 3 possible scenarios, depending on the number of Kafka partitions and the Flink parallelism:

- Kafka partitions == Flink parallelism: This case is ideal, since each consumer takes care of one partition. If your messages are balanced between partitions, the work will be evenly spread across Flink operators.

- Kafka partitions < Flink parallelism: Some Flink instances won’t receive any messages.

- Kafka partitions > Flink parallelism: Some instances will handle multiple partitions.
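The three scenarios can be illustrated with a small simulation. This is a simplified round-robin model, not Flink's actual assignment code (the real connector also offsets the starting subtask per topic). The function names are hypothetical.

```python
def assign_partitions(num_partitions, parallelism):
    """Map each subtask index to the list of partitions it would read.

    Simplified model: partition p goes to subtask p % parallelism.
    """
    assignment = {subtask: [] for subtask in range(parallelism)}
    for partition in range(num_partitions):
        assignment[partition % parallelism].append(partition)
    return assignment


def idle_subtasks(num_partitions, parallelism):
    """Return the subtasks that end up with no partition to read."""
    assignment = assign_partitions(num_partitions, parallelism)
    return [subtask for subtask, parts in assignment.items() if not parts]


# Walk through the three scenarios with a parallelism of 4.
for partitions in (4, 1, 8):
    print(
        f"partitions={partitions} parallelism=4",
        assign_partitions(partitions, 4),
        "idle:", idle_subtasks(partitions, 4),
    )
```

With 1 partition and a parallelism of 4, the model leaves subtasks 1, 2 and 3 idle, which matches the single active consumer observed at the start.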

Learning:

Always make sure the number of Kafka topic partitions is >= the Flink parallelism.